The facts about Cloaking

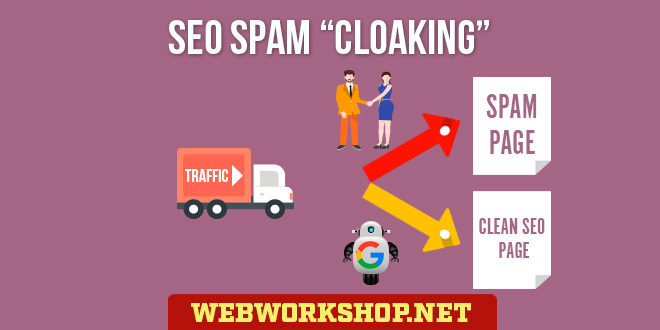

Cloaking is the technique of returning different pages to search engines than to people. When a person requests the page of a particular URL from the website, the site’s normal page is returned, but when a search engine spider makes the same request, a special page that has been created for the engine is returned, and the normal page for the URL is hidden from the engine – it is cloaked.

The usual purposes of cloaking are to hide the HTML code of high-ranking pages from people, so that it can’t be stolen, and to provide search engine spiders with highly optimized pages that wouldn’t look particularly good in browsers.

There are three ways of cloaking. One is “IP delivery”, where the IP addresses of spiders are recognised at the server and handled accordingly; another is “User-Agent delivery”, where the spiders’ User-Agents are recognised at the server and handled accordingly; and the third is a combination of the two.
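The three detection methods can be sketched in a few lines of Python. This is only an illustration: the IP range is a reserved documentation range, the spider name “examplebot” is made up, and treating the combined method as “both checks must match” is an assumption, since a site could equally accept either one.

```python
import ipaddress

# Hypothetical values for illustration only: real spider IP ranges and
# User-Agent strings would have to come from each engine's own documentation.
SPIDER_NETWORKS = [ipaddress.ip_network("192.0.2.0/24")]  # reserved documentation range
SPIDER_AGENT_TOKENS = ["examplebot"]  # made-up spider name


def matches_ip(remote_addr: str) -> bool:
    """IP delivery: is the requesting address inside a known spider range?"""
    address = ipaddress.ip_address(remote_addr)
    return any(address in network for network in SPIDER_NETWORKS)


def matches_user_agent(user_agent: str) -> bool:
    """User-Agent delivery: does the header contain a known spider token?"""
    lowered = user_agent.lower()
    return any(token in lowered for token in SPIDER_AGENT_TOKENS)


def is_spider(remote_addr: str, user_agent: str, method: str = "both") -> bool:
    """Apply one of the three methods: 'ip', 'user-agent', or 'both'."""
    if method == "ip":
        return matches_ip(remote_addr)
    if method == "user-agent":
        return matches_user_agent(user_agent)
    if method == "both":
        # Assumption: the combined method requires both checks to agree.
        return matches_ip(remote_addr) and matches_user_agent(user_agent)
    raise ValueError(f"unknown method: {method}")
```

A User-Agent header is easy for anyone to fake, whereas an IP address is much harder to spoof, which is one reason a site might prefer the IP check or the combination.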

How cloaking works

Every website contains one or more ordinary webpages. For every page in the site that needs to be cloaked, another page is created that is designed to rank highly in a search engine. If more than one search engine is being targeted, then a page is created for each engine, because different engines have different criteria for ranking pages, and pages need to be created to match each engine’s criteria.

The search engine pages may be totally different to their ‘normal’ equivalents, or they may only be slightly different, or anywhere in between. For instance, a page may rank highly in a particular engine if it starts out with an additional paragraph of keyword-stuffed text, so a page like that is created for the engine.

When a request for a page comes in, a program in the site detects what is making the request. If it is a person, then the normal page is returned, but if it is a search engine spider, the appropriate search engine page is returned. In that way the engines never see the site’s normal page, and, of course, people never see the pages that are created for the search engines.
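The page selection described above might look something like the following sketch. The URLs, engine names and page bodies are all invented for the example, and a real site would read its pages from files or a database rather than from a dictionary.

```python
# Hypothetical in-memory site: one normal page per URL, plus one special
# page per targeted engine. The engine names and page bodies are made up.
NORMAL_PAGES = {"/widgets": "<html>normal widgets page</html>"}
ENGINE_PAGES = {
    ("/widgets", "engine-a"): "<html>widgets page written for engine A</html>",
    ("/widgets", "engine-b"): "<html>widgets page written for engine B</html>",
}


def select_page(url, engine=None):
    """Return the page body for a request.

    `engine` is None for a person, or the name of the engine whose spider
    was detected. If no special page exists for that URL and engine, the
    normal page is returned to everyone.
    """
    if engine is not None:
        special = ENGINE_PAGES.get((url, engine))
        if special is not None:
            return special
    return NORMAL_PAGES[url]
```

Here `select_page("/widgets")` gives the normal page, while `select_page("/widgets", "engine-a")` gives the page that was written for that engine.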

Dynamic sites work in the same way, except that the normal pages, and the search engine pages, are created dynamically. The search engine pages could even be static, while the normal pages are dynamic.

Is cloaking ethical?

Some people say that cloaking is unethical, but they are mistaken. Their idea is that serving different pages to the engines than to people is simply wrong, because the engines are ranking the page according to what they believe it to be and not according to what it actually is. That idea is purely a matter of principle, and nothing at all to do with ethics.

The ethical view is that, if a page is ranked correctly, according to its topic, then surfers will see it listed in the search results, click on the listing, and find what they expected to find at the other end. It doesn’t matter how the page came to be ranked in that position, and it doesn’t matter if another page took its place when the engine was evaluating it. As long as the ranking is correct according to its topic, and the page at the other end is what was expected when clicking on the listing, then surfers are perfectly happy.

Cloaking can be used unethically, by sending people to sites and topics that they did not expect to go to when clicking on a listing in the search results, and that is an excellent reason to be against the misuse of cloaking, but it doesn’t mean that cloaking is unethical. It just means that, like many other things, it has unethical uses.

An example of how cloaking can actually help Google

Google’s crawlers won’t spider pages that have anything that looks like Session IDs in their URLs. If they did, they would run the risk of spidering a potentially infinite number of pages, because each page that is requested would contain links to other pages, and the link URLs would contain the current Session ID, which makes them different from the URLs seen the last time the page was requested. And so it would go on and on, producing a vast number of unique URLs to spider and index.

It means that Google won’t spider most of the pages on some websites. But Google actually wants to spider most or all of each website’s pages. The solution is to cloak the pages. By spotting page requests from the Google spiders, and delivering modified pages without the normal Session IDs in the link URLs, the site enables Google to spider all of its pages. This is precisely what Google wants, it’s what the website owner wants and, if asked, it would be what surfers want. It helps everybody and harms no-one.
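As a rough sketch of the modification, the link URLs in a page can be rewritten without the Session ID before the page is sent to the spider. The parameter name “sid” is an assumption made for the example, and the regular expression only handles simple, double-quoted href attributes, so it is an illustration and not a complete HTML rewriter.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

SESSION_PARAM = "sid"  # hypothetical name; sites use many different names


def strip_session_id(url: str) -> str:
    """Return the URL with the session parameter removed from its query string."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != SESSION_PARAM
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


def strip_session_ids_from_links(html: str) -> str:
    """Rewrite every double-quoted href in the page without the session parameter."""
    return re.sub(
        r'href="([^"]*)"',
        lambda match: 'href="' + strip_session_id(match.group(1)) + '"',
        html,
    )
```

With the Session IDs removed, a link always has the same URL no matter when the page is requested, so the spider no longer sees a new set of URLs on every visit.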

This example alone demonstrates that cloaking is not intrinsically wrong or unethical. The technique can be used unethically, but it has various perfectly ethical uses.

Misuses of the word “cloaking”

Over the years, some people have mistakenly used the word “cloaking” to describe methods that are not cloaking. One mistake is the claim that all IP delivery is cloaking. It isn’t. IP delivery simply means delivering content on the basis of the requester’s IP address. Cloaking can make use of it, but IP delivery in itself doesn’t involve creating special pages for search engines, and it doesn’t involve hiding a site’s normal pages from search engines, so it isn’t cloaking. The cloaking method is about creating and delivering special pages for search engines, and hiding the site’s normal pages from them.

Another mistake is the claim that conditional auto-redirecting is cloaking. It isn’t. It’s conditional auto-redirecting. Search engines do it all the time, by sending people to their local search engine version when they type the .com address into the browser’s address bar. They use a person’s IP address to determine where s/he is in the world, and automatically redirect the person to the local version of the engine.
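A conditional auto-redirect of this kind can be sketched as follows. The IP ranges, country codes and example.com addresses are all invented for the illustration, and a real engine would use a proper geolocation database. The point to notice is that nothing here creates special pages for spiders or hides the normal pages from them.

```python
import ipaddress

# Hypothetical lookup data: reserved documentation ranges mapped to
# made-up countries, and made-up local addresses for each country.
COUNTRY_BY_NETWORK = {
    ipaddress.ip_network("198.51.100.0/24"): "uk",
    ipaddress.ip_network("203.0.113.0/24"): "de",
}
LOCAL_SITES = {
    "uk": "https://uk.example.com/",
    "de": "https://de.example.com/",
}


def redirect_target(remote_addr):
    """Return the local address to redirect to, or None to serve the .com page."""
    address = ipaddress.ip_address(remote_addr)
    for network, country in COUNTRY_BY_NETWORK.items():
        if address in network:
            return LOCAL_SITES[country]
    return None
```

Every requester from the same place is treated in the same way, whether it is a person or a spider.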

Cloaking is the technique of creating special pages for search engines, delivering those pages to the engines when they request normal pages, and hiding the normal pages from them. Other methods have their own names, but they are not cloaking.

Cloaking was quite common when search engines used a page’s content to determine its ranking, but when Google became popular, and other big engines followed Google’s lead, rankings became mainly links-based. Page content is still used for rankings, but it doesn’t have the weight that it used to have, so cloaking isn’t as common now as it used to be. For this reason, it is probable that a great many people never learned how cloaking works, but they often come across the word being used, so they incorrectly attribute other things to cloaking, because the word “cloaking” could conceivably be used to describe them. But cloaking is a method that works in a very specific way, and using the word to describe other methods is a mistake that sometimes causes confusion.

Cloaking Software

Fantomas is widely recognised as the best cloaking software around. Information about it can be found here.